In 2013, Mikolov et al. published a paper showing that complicated semantic analogy problems could be solved simply by adding and subtracting vectors learned with a neural network. Since then, there has been further investigation into what actually lies behind this method, along with some suggested improvements. This post is a summary and discussion of the paper “Linguistic Regularities in Sparse and Explicit Word Representations” by Omer Levy and Yoav Goldberg, published at CoNLL 2014.

The Task

The task under consideration is analogy recovery. These are questions of the form:

a is to b as c is to d

In the usual setting, the system is given the words a, b, and c, and it needs to find d. For example:

‘apple’ is to ‘apples’ as ‘car’ is to ?

where the correct answer is ‘cars’. Or the well-known example:

‘man’ is to ‘woman’ as ‘king’ is to ?

where the desired answer is ‘queen’.

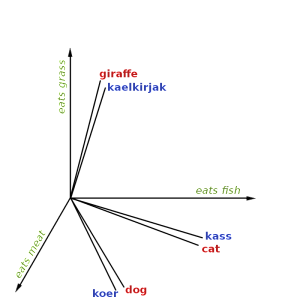

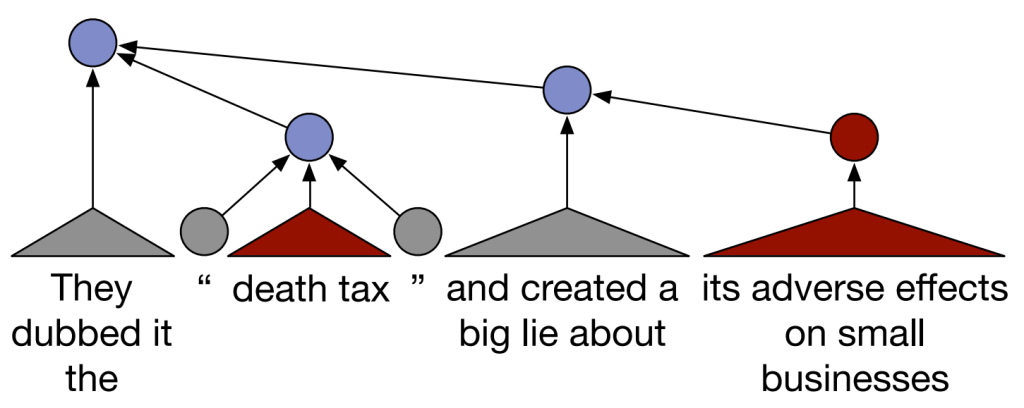

While methods such as relation extraction would also be reasonable approaches to this problem, research in this area mainly focuses on solving it with vector similarity methods. This means we create a vector representation for each word, and then use the positions of these vectors in the high-dimensional feature space to determine what the missing word should be.
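To make the idea concrete, here is a minimal sketch of the add-and-subtract approach on the two examples above. Everything in it is an assumption made for illustration: the six-dimensional vectors in `TOY_VECTORS` are hand-written rather than learned, and the names `cosine` and `solve_analogy` are hypothetical helpers, not taken from either paper. Real systems learn vectors with hundreds of dimensions from a large corpus, so the answer is rarely this clean.

```python
import math

# Hand-made toy vectors, invented purely for illustration (NOT learned).
# Dimensions: (person, royal, female, fruit, vehicle, plural)
TOY_VECTORS = {
    "man":    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "woman":  [1.0, 0.0, 1.0, 0.0, 0.0, 0.0],
    "king":   [1.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    "queen":  [1.0, 1.0, 1.0, 0.0, 0.0, 0.0],
    "apple":  [0.0, 0.0, 0.0, 1.0, 0.0, 0.0],
    "apples": [0.0, 0.0, 0.0, 1.0, 0.0, 1.0],
    "car":    [0.0, 0.0, 0.0, 0.0, 1.0, 0.0],
    "cars":   [0.0, 0.0, 0.0, 0.0, 1.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def solve_analogy(a, b, c, vectors=TOY_VECTORS):
    """Answer 'a is to b as c is to ?' by vector arithmetic."""
    # Build the target point b - a + c in the feature space.
    target = [vb - va + vc
              for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # The three question words are excluded from the candidates.
    candidates = [w for w in vectors if w not in (a, b, c)]
    # Return the remaining word whose vector is most similar to the target.
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(solve_analogy("man", "woman", "king"))    # queen
print(solve_analogy("apple", "apples", "car"))  # cars
```

Excluding the three question words from the candidates is a deliberate choice in this sketch: the target point often lies very close to one of them, which would otherwise be returned as the answer.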